Containerization has revolutionized the way software applications are developed, deployed, and managed. Kubernetes, an open-source container orchestration platform, has emerged as the de facto standard for managing containerized applications at scale.

The Kubernetes Architecture

Kubernetes is built around a cluster of nodes that work together to form a highly available and scalable platform. The key components of a Kubernetes cluster include:

1. Master Node

The master node hosts the control plane for the cluster, which typically consists of the API server, the scheduler, the controller manager, and the etcd data store. It coordinates overall cluster operations, including scheduling pods, scaling applications, and maintaining cluster state.

2. Worker Nodes

Worker nodes (historically called minions) are the machines where containers are deployed and executed. Each one runs the kubelet, a container runtime, and kube-proxy, which together communicate with the control plane and run the assigned pods.

3. Pods

Pods are the smallest and most fundamental unit in Kubernetes. A pod represents a single instance of a running process in the cluster. It can contain one or more tightly coupled containers that share the same network and storage resources.

4. ReplicaSets

ReplicaSets ensure the desired number of pod replicas are running at all times. They provide scalability and fault tolerance by automatically creating or removing pods until the number running matches the replica count in their spec.

5. Services

Services define a stable network endpoint for accessing a group of pods. They enable load balancing and service discovery within the cluster, allowing applications to communicate with each other seamlessly.

6. Volumes

Volumes provide storage for containers running in the cluster. Depending on the volume type, data can persist when containers are restarted or when pods are rescheduled to other nodes.
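As a minimal sketch of storage that outlives a container, the manifests below define a PersistentVolumeClaim and mount it into a pod. The names (my-app-data, my-app-pod), the placeholder image, the 1Gi size, and the /data mount path are assumptions for illustration, and the claim relies on the cluster having a default StorageClass.

```yaml
# Hypothetical claim for 1Gi of storage from the default StorageClass
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-app-data
spec:
  accessModes:
    - ReadWriteOnce          # mounted read-write by a single node
  resources:
    requests:
      storage: 1Gi
---
# Hypothetical pod that mounts the claim at /data
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod
spec:
  containers:
    - name: my-app-container
      image: my-app-image:latest   # placeholder image
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: my-app-data     # must match the claim name above
```

Files written under /data survive container restarts because they live on the claimed volume rather than in the container's writable layer.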

How to Set Up a Kubernetes Cluster

Setting up a Kubernetes cluster involves several steps. Here's an overview of the process:

1. Infrastructure Provisioning

Choose a cloud provider or set up your own infrastructure to host the Kubernetes cluster. Popular cloud providers such as AWS, Google Cloud Platform (GCP), and Azure offer managed Kubernetes services. Alternatively, you can set up Kubernetes on bare-metal or virtual machines.

2. Install Kubernetes

Install the Kubernetes software components on the master and worker nodes. This can be done using various installation methods such as kubeadm, kops, or by using managed Kubernetes services.

3. Configure Networking

Set up a networking solution that allows communication between the nodes in the cluster. Kubernetes supports various networking plugins, such as Calico, Flannel, and Weave Net, which enable pod-to-pod communication across nodes.

4. Join Worker Nodes

Join the worker nodes to the cluster by running a command provided by the Kubernetes installation tool. This connects the worker nodes to the control plane managed by the master node.

5. Test Cluster Connectivity

Ensure that the nodes are connected and communicating with each other correctly. Verify that you can deploy and manage pods on the cluster.

Deploying Containerized Applications

Once the Kubernetes cluster is set up, deploying containerized applications becomes straightforward. Kubernetes provides multiple options for deploying applications:

1. Using Deployments

Deployments are the recommended way to manage the lifecycle of applications in Kubernetes. They provide declarative definitions for creating and updating sets of pods, including scaling and rolling updates.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          ports:
            - containerPort: 8080

This YAML manifest creates a Deployment named my-app-deployment with 3 replicas. It specifies a pod template with a container named my-app-container that runs the my-app-image Docker image and listens on port 8080.

apiVersion: v1
kind: Service
metadata:
  name: my-app-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer

This YAML manifest creates a Service named my-app-service. It selects pods with the label app: my-app and exposes port 80 externally, forwarding traffic to port 8080 on the selected pods. The service type is set to LoadBalancer, which asks the cloud provider to provision an external load balancer for the Service.

2. Using Pods

Pods can be directly created using YAML or JSON manifests. However, managing individual pods is less common and not recommended unless you have specific use cases that require direct control over pod scheduling and lifecycle.
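For comparison with the Deployment above, here is a minimal sketch of a bare pod manifest. The pod name my-app-pod is an assumption; the image and port reuse the placeholders from the Deployment example.

```yaml
# A standalone pod: no controller owns it
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod            # hypothetical name
  labels:
    app: my-app
spec:
  containers:
    - name: my-app-container
      image: my-app-image:latest
      ports:
        - containerPort: 8080
```

Because nothing manages this pod, Kubernetes does not recreate it if it is deleted or if its node fails, which is the main reason Deployments are preferred.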

3. Using Helm

Helm is a package manager for Kubernetes that simplifies the deployment and management of applications. It allows you to define reusable application templates, called charts, and install them on a Kubernetes cluster.
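To make the idea of a chart concrete, the sketch below shows the two files that usually sit at the root of a chart directory. The chart name my-app and every key in values.yaml are assumptions: values keys are defined by the chart author and are only meaningful if the chart's templates reference them.

```yaml
# Chart.yaml: chart metadata (apiVersion v2 is the format used by Helm 3)
apiVersion: v2
name: my-app                  # hypothetical chart name
description: A chart for the my-app service
type: application
version: 0.1.0                # version of the chart itself
appVersion: "1.0.0"           # version of the application it deploys
---
# values.yaml: default settings that the chart's templates can read
replicaCount: 3
image:
  repository: my-app-image
  tag: latest
service:
  type: LoadBalancer
  port: 80
```

Assuming the chart lives in a local my-app directory, it could be installed with helm install my-app ./my-app, and individual values can be overridden at install time.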

Managing Application Lifecycle with Kubernetes

Kubernetes provides various features to manage the lifecycle of containerized applications efficiently. Let's take a look at a few of them.

1. Health Probes

Kubernetes supports liveness and readiness probes to determine the health of containers. Liveness probes check if the container is running correctly, while readiness probes determine if the container is ready to accept traffic.

2. Rolling Updates

Kubernetes supports rolling updates to seamlessly update an application to a new version without downtime. It gradually replaces old pods with new ones, ensuring a smooth transition.
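How gradually the pods are replaced is controlled by the Deployment's update strategy. The sketch below extends the earlier my-app-deployment example; the values chosen for maxSurge, maxUnavailable, and revisionHistoryLimit are assumptions picked for illustration, not recommendations.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-deployment
spec:
  replicas: 3
  revisionHistoryLimit: 5     # old ReplicaSets kept for rollbacks (default is 10)
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1             # at most 1 extra pod above the desired count
      maxUnavailable: 0       # never drop below the desired count during the update
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          ports:
            - containerPort: 8080
```

With these settings, Kubernetes starts one new pod, waits for it to become ready, and only then terminates an old one. If the strategy is omitted, both maxSurge and maxUnavailable default to 25%.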

3. Rollbacks

In case an application update introduces issues, Kubernetes allows you to roll back to a previous version. This helps mitigate the impact of faulty deployments and ensures the availability of the application.

4. Jobs and CronJobs

Kubernetes supports running batch jobs and scheduled tasks through Jobs and CronJobs, respectively. These features are useful for running one-off or periodic tasks within the cluster.
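As a sketch of a one-off task, the Job below runs a single container to completion. The name db-migration, the image, and the backoffLimit value are assumptions for illustration.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: db-migration                     # hypothetical job name
spec:
  backoffLimit: 3                        # retry up to 3 times before marking the Job failed
  template:
    spec:
      containers:
        - name: db-migration
          image: my-migration-image:latest   # placeholder image
      restartPolicy: Never               # a failed run is retried in a new pod
```

A Job counts as complete once its pod exits successfully, whereas a CronJob creates Jobs like this one on a schedule.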

Scaling and Load Balancing with Kubernetes

Kubernetes provides robust scaling and load-balancing mechanisms to handle varying application workloads:

You can scale a Deployment manually with the kubectl command:

kubectl scale deployment my-app-deployment --replicas=5

1. Horizontal Pod Autoscaling (HPA)

HPA automatically scales the number of pod replicas based on CPU utilization, memory consumption, or custom metrics. It ensures that applications have the necessary resources to handle increased traffic.

# autoscaling/v2 is the stable API; autoscaling/v2beta2 was removed in Kubernetes 1.26
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70

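The YAML manifest above creates an HPA named my-app-hpa for the Deployment my-app-deployment. It defines the minimum and maximum number of replicas and the metric to scale on (average CPU utilization, with a target of 70%). Utilization is measured relative to the containers' CPU requests, so the pod template must set resources.requests.cpu for this metric to work.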

2. Service Load Balancing

Kubernetes Services distribute traffic across the pod replicas of an application. This load-balancing functionality allows applications to scale horizontally and handle high-traffic loads efficiently.

3. Cluster Autoscaling

Cluster Autoscaling automatically adjusts the size of the Kubernetes cluster based on resource demands. It scales up or down the number of worker nodes to ensure optimal resource utilization.

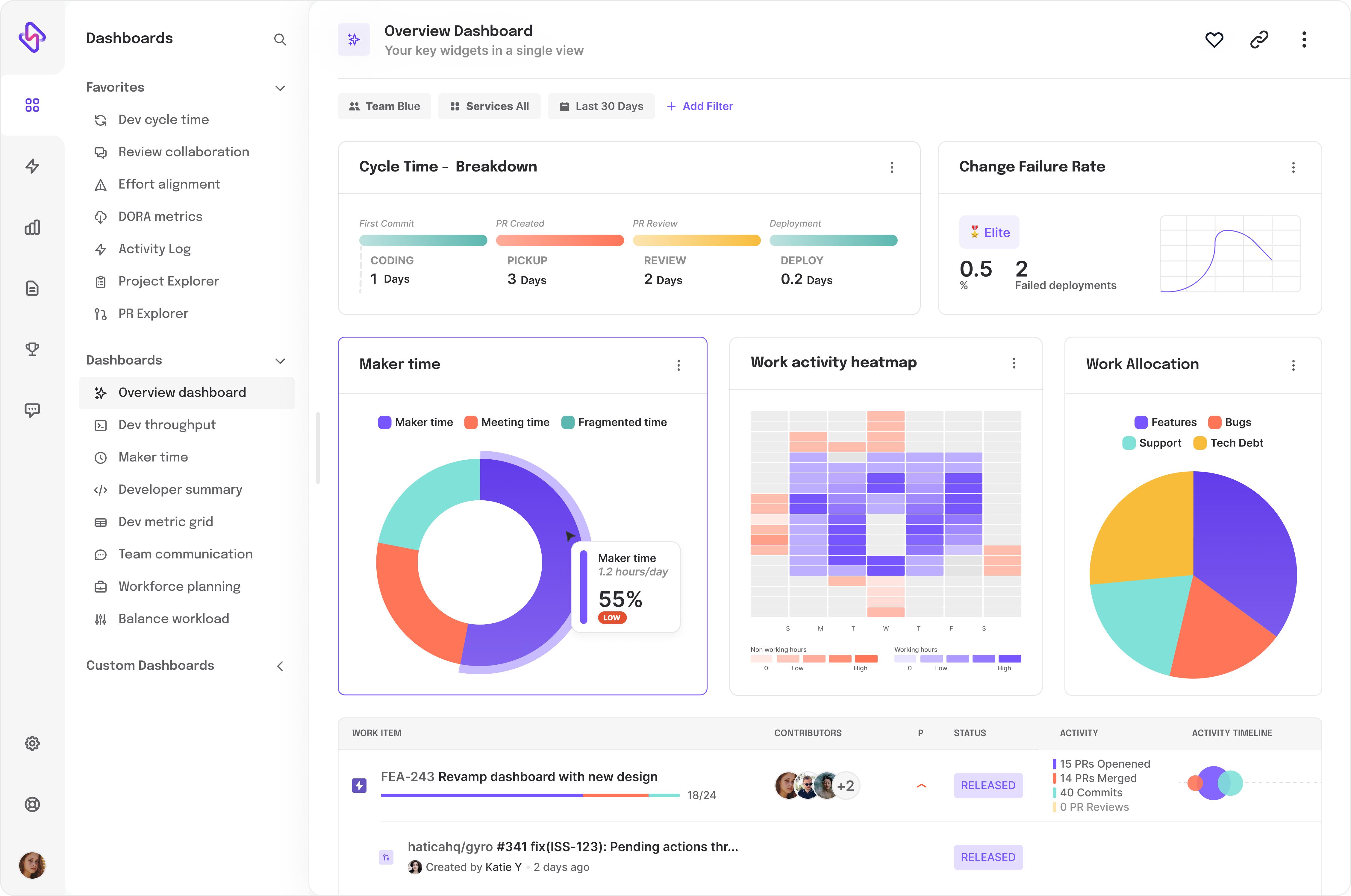

Monitoring and Logging of Applications Running on Kubernetes

Monitoring and logging are crucial for understanding the health and performance of applications running on Kubernetes:

1. Prometheus

Prometheus is a popular open-source monitoring and alerting toolkit widely used in the Kubernetes ecosystem. It provides extensive metrics collection capabilities and integrates well with Kubernetes through the Prometheus Operator.
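With the Prometheus Operator installed, scrape targets are usually declared through its ServiceMonitor custom resource. The sketch below is written under several assumptions: the application exposes metrics at /metrics, its Service carries the label app: my-app, and that Service has a port named http. None of these are set in the manifests shown in this article.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: my-app-monitor        # hypothetical name
spec:
  selector:
    matchLabels:
      app: my-app             # matches labels on the Service, not on the pods
  endpoints:
    - port: http              # name of the Service port to scrape (assumed)
      path: /metrics          # assumed metrics path
      interval: 30s
```

The Prometheus instance managed by the operator must also be configured to select this ServiceMonitor, which depends on how the operator was installed.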

2. Elasticsearch and Fluentd

Elasticsearch and Fluentd, usually paired with Kibana as the EFK stack, are commonly used for log aggregation and analysis in Kubernetes. Fluentd collects logs from different sources, including containers, and forwards them to Elasticsearch for indexing and searching.

3. Container Runtime Monitoring

Kubernetes integrates with container runtimes, such as Docker or containerd, to collect low-level container metrics. These metrics help monitor resource usage, container health, and performance.

High Availability and Fault Tolerance

To ensure high availability and fault tolerance, Kubernetes provides several features:

1. Replication and Pod Anti-Affinity

Kubernetes allows you to specify pod anti-affinity rules to ensure that replicas of an application are not scheduled on the same node. This increases fault tolerance by preventing a single point of failure.
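A minimal sketch of such a rule, added to the pod template of the earlier my-app-deployment example, might look like this. Choosing the required (hard) form of the rule is an assumption made for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      affinity:
        podAntiAffinity:
          # Hard rule: never place two app=my-app pods on the same node
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchLabels:
                  app: my-app
              topologyKey: kubernetes.io/hostname
      containers:
        - name: my-app-container
          image: my-app-image:latest
```

With a hard rule, any replicas beyond the number of available nodes stay Pending. The softer preferredDuringSchedulingIgnoredDuringExecution form spreads pods where possible but still schedules them when it cannot.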

2. Cluster-Level Fault Tolerance

Kubernetes clusters can be made fault-tolerant by running multiple control plane components, such as multiple master nodes or etcd replicas. This ensures the cluster can recover from control plane failures without significant downtime.

3. Persistent Volumes and StatefulSets

StatefulSets enable the management of stateful applications in Kubernetes. They ensure stable network identities and persistent storage for pods, making it easier to run databases and other stateful workloads.
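As a sketch of how a database might be run this way, the manifests below pair a headless Service with a single-replica StatefulSet. The name db, the placeholder image, the 1Gi size, and the /data/db mount path (the default data directory of the official MongoDB image) are assumptions for illustration.

```yaml
# Headless Service: gives each pod a stable DNS name such as db-0.db
apiVersion: v1
kind: Service
metadata:
  name: db
spec:
  clusterIP: None
  selector:
    app: db
  ports:
    - port: 27017
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  serviceName: db                   # must reference the headless Service above
  replicas: 1
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
        - name: db
          image: my-database-image:latest   # placeholder image
          ports:
            - containerPort: 27017
          volumeMounts:
            - name: data
              mountPath: /data/db
  volumeClaimTemplates:             # one PersistentVolumeClaim is created per pod
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
```

The pod is always named db-0, and if it is rescheduled it reattaches to the same claim, so its data follows it.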

Project-Based Example For Managing Containerized Applications with Kubernetes

For the sample project, we use a Node.js Express app with MongoDB as the database. The app allows users in a particular community to resell used commodities.

Here’s our simple schema:

const mongoose = require('mongoose');

const userSchema = new mongoose.Schema({
  userId: {
    type: String,
    required: true,
    unique: true
  },
  role: {
    type: String,
    enum: ['user', 'admin'],
    default: 'user'
  },
  firstName: {
    type: String,
    required: true
  },
  lastName: {
    type: String,
    required: true
  },
  location: {
    type: String,
    required: true
  },
  collections: [{
    type: mongoose.Schema.Types.ObjectId,
    ref: 'Item'
  }]
});

module.exports = mongoose.model('User', userSchema);

The admin schema:

const mongoose = require('mongoose');

const adminSchema = new mongoose.Schema({
  adminId: {
    type: String,
    required: true,
    unique: true
  },
  firstName: {
    type: String,
    required: true
  },
  lastName: {
    type: String,
    required: true
  }
});

module.exports = mongoose.model('Admin', adminSchema);

Next, handle the routes, authentication, and session management in the application. We can then proceed to build our Kubernetes cluster locally.

Install Minikube if you do not have it installed already, then start a local cluster:

minikube start --driver=<hypervisor>

Replace <hypervisor> with the name of the hypervisor you installed (e.g., virtualbox, kvm2, hyperkit).

Run minikube status to verify that the cluster is running.

Use the kubectl command-line tool to interact with the Minikube cluster. For example, you can run the following commands to get cluster information:

kubectl cluster-info

kubectl get nodes

Deploying our backend and database to Minikube

backend-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend-container
          image: my-backend-image:latest
          ports:
            - containerPort: 3000

This YAML manifest creates a Deployment named backend-deployment with 2 replicas. It specifies a pod template with a container named backend-container running the my-backend-image Docker image on port 3000.

backend-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: backend-service
spec:
  selector:
    app: backend
  ports:
    - protocol: TCP
      port: 3000
      targetPort: 3000

This YAML manifest creates a Service named backend-service. It selects pods with the label app: backend and exposes port 3000, which forwards traffic to port 3000 on the selected pods.

database-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: database-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: database
  template:
    metadata:
      labels:
        app: database
    spec:
      containers:
        - name: database-container
          image: my-database-image:latest
          ports:
            - containerPort: 27017

This YAML manifest creates a Deployment named database-deployment with 1 replica. It specifies a pod template with a container named database-container running the my-database-image Docker image on port 27017.

database-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: database-service
spec:
  selector:
    app: database
  ports:
    - protocol: TCP
      port: 27017
      targetPort: 27017

This YAML manifest creates a Service named database-service. It selects pods with the label app: database and exposes port 27017, which forwards traffic to port 27017 on the selected pods.
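Because database-service has a stable DNS name inside the cluster, the backend can reach MongoDB through it rather than through a pod IP. The sketch below shows one way to pass that address to the backend container. The variable name MONGODB_URI and the database name marketplace are assumptions, and the application would need to read the variable itself, for example by passing process.env.MONGODB_URI to mongoose.connect.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend-container
          image: my-backend-image:latest
          ports:
            - containerPort: 3000
          env:
            # Hypothetical variable; the host part is the Service name
            - name: MONGODB_URI
              value: mongodb://database-service:27017/marketplace
```

The short name database-service resolves only from pods in the same namespace as the Service.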

To deploy these manifests in your Minikube cluster, apply them using the kubectl apply command:

kubectl apply -f backend-deployment.yaml

kubectl apply -f backend-service.yaml

kubectl apply -f database-deployment.yaml

kubectl apply -f database-service.yaml

To configure horizontal pod autoscaling (HPA) in Kubernetes, define it in a YAML manifest:

hpa.yaml

# autoscaling/v2 is the stable API; autoscaling/v2beta2 was removed in Kubernetes 1.26
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app-deployment
  minReplicas: 2
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70

To apply this HPA manifest, run the following command:

kubectl apply -f hpa.yaml

To run scheduled tasks, define a CronJob:

# batch/v1 is the stable CronJob API; batch/v1beta1 was removed in Kubernetes 1.25
apiVersion: batch/v1
kind: CronJob
metadata:
  name: data-backup
spec:
  schedule: "0 0 * * *" # Runs at midnight every day
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: data-backup
              image: my-data-backup-image:latest
              # Specify other container configuration
          restartPolicy: OnFailure

In this example, a CronJob named data-backup is created, which runs the my-data-backup-image Docker image at midnight every day. The restartPolicy is set to OnFailure, meaning that if the container exits with an error, it is restarted in the same pod.

Here's another example, a YAML manifest for a Deployment with liveness and readiness probes:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          # Specify other container configuration
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 10
            periodSeconds: 15
          readinessProbe:
            httpGet:
              path: /readiness
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10

To perform a rolling update, you can update the image version of the deployment or change other configuration settings. Kubernetes will automatically create new pods with the updated configuration and gradually terminate the old pods.

kubectl set image deployment/my-app-deployment my-app-container=my-app-image:v2

That's all about managing containerized applications with Kubernetes. For more details on Kubernetes, check out Kelsey Hightower's Kubernetes the Hard Way.
