Everywhere you turn in IT operations circles, the topic du jour is GitOps. Lately, there’s been a lot of chatter about infrastructure automation.

A few years ago, the Operations team in an IT organization would spend days, weeks, or even months provisioning new or modified IT infrastructure environments. Fast forward to today, and that timeline has shrunk to mere hours, even for large-scale applications.

How?

It’s the magic of GitOps-led IT infrastructure automation. GitOps saves teams money and plenty of time that they can reinvest in the rest of the SDLC, making the business more sustainable, robust, and resilient.

Read on to understand what GitOps is, its principles and benefits, and a high-level workflow of the GitOps process.

What’s GitOps?

GitOps is the umbrella term for the set of processes, practices, and techniques that automate infrastructure design, development, and management using declarative code that is version-controlled in Git.

To understand the GitOps way of managing infrastructure, it helps to first understand how things worked without GitOps.

Ops Before GitOps

Earlier, system administrators with a fair knowledge of networking, server, and storage hardware designed, deployed, monitored, and managed IT infrastructure. They also looked after its compliance, security, performance, and scalability. Most of them did this manually, by writing bash scripts, or through a GUI. It was a highly time-intensive process, and if anything went wrong, rolling back was a never-ending pain in the spine.

After that, we entered the age of cloud infrastructure and DevOps. On the one hand, it solved the challenges of scalability and performance; on the other, it made it hard for Ops teams to keep up with the development teams’ release velocity.

Evolution of GitOps

Building cloud-native applications for dozens of different environments, each with its own platform-specific implementation requirements, made things even more complex for Ops. Software as a service (SaaS) was rising in popularity, but managing multiple cloud service environments (especially in a hybrid cloud) was becoming a big pain in the SaaS.

Configuration management (CM) tools like Puppet and Chef could easily recreate an environment, but they were not mature enough to provision new VMs in cloud-native infrastructure.

New offerings from AWS (CloudFormation) and Azure (Azure Resource Manager, or ARM) solved the challenge of provisioning VMs in the cloud, but they were platform-locked: CloudFormation could only be used with AWS, and ARM only with Azure.

That’s where platform-agnostic tools like Ansible and Terraform entered the game. Containerization (popularized by Docker, with Google’s Kubernetes for orchestration), infrastructure as code (IaC), and API-led cloud-native infrastructure management also rose in popularity. In a way, all of these helped put DevOps theory into action.

Ops With GitOps

As defined earlier,

“GitOps is the umbrella term given to the set of processes, practices, and techniques that codify and automate infrastructure design, development, and management with declarative code that is version-controlled using Git.”

So now developers themselves can specify the state of the container environment their application needs in a structured key-value file, and the system automatically works to attain that state. This means the application code, the system code, and the security and compliance declarations can all reside in the same repository, so applications can be shipped to production quickly and reliably.

How Does GitOps Work?

There are three main components/principles of the GitOps framework or workflow:

1. Infrastructure as Code (IaC)

- The infrastructure code that defines the container state lives in a Git repository, often alongside the application code.

- The infrastructure code is declared in JSON or YAML files that are committed to the repository like any other code, so Git tracks every change to them.

- Teams using GitOps often use Kubernetes as their orchestration tool, and the aforementioned YAML or JSON files are often referred to as ‘Kubernetes deployment manifests’.

- These deployment manifests describe how to create and manage a set of identical replicas of a specific application or microservice in a Kubernetes cluster.

- Deployment manifests contain the desired state of the deployment, the number of replicas, the container image to use, environment variables, ports, and other configuration settings.

- Each one of these is declared as a key-value parameter.

- For instance, the desired state of a web server might include specific software versions, configuration settings, and security policies. Similarly, the desired state of a database cluster might include the number of replicas, replication settings, and backup policies.
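To make the key-value structure concrete, here is a minimal sketch that builds a Kubernetes apps/v1 Deployment manifest as a Python dict and prints it as JSON. The application name, image (nginx:1.25), port, and environment variable are hypothetical placeholders; in practice a team would commit the YAML or JSON file itself to Git rather than generate it on the fly.

```python
import json


def make_deployment(name: str, image: str, replicas: int, port: int, env: dict[str, str]) -> dict:
    """Return a Kubernetes apps/v1 Deployment manifest as a plain dict.

    Every setting is a key-value pair, mirroring the YAML/JSON file a team
    would commit to Git. All argument values used below are hypothetical.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,  # desired number of identical pods
            "selector": {"matchLabels": dict(labels)},  # must match the pod template labels
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # container image to run
                            "ports": [{"containerPort": port}],
                            "env": [{"name": k, "value": v} for k, v in env.items()],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical web server: 3 replicas of nginx listening on port 80.
manifest = make_deployment("web", "nginx:1.25", replicas=3, port=80, env={"LOG_LEVEL": "info"})
print(json.dumps(manifest, indent=2))
```

Since kubectl apply accepts both JSON and YAML manifests, the printed output could be saved to a file and applied as-is.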

2. Merge Requests (MRs)

- Using merge requests (or pull requests), you propose any changes required in the infrastructure configuration by modifying the JSON or YAML files.

- As in DevOps, teams can review the infrastructure code, add comments, and collaborate before the change is approved and merged, which triggers the CI/CD pipeline.

- The history of previous MRs also gives you an audit log of the infrastructure code, which helps when you need to roll back to a previously working state.
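Because every setting is a key-value pair, a reviewer can read an infrastructure MR as a list of key-level changes. The sketch below assumes both versions of a manifest have already been parsed into Python dicts; it flattens them into dotted paths and reports what was added, removed, or changed. The function names and the trimmed-down manifests in the example are hypothetical.

```python
from typing import Any


def flatten(obj: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts/lists into dotted key paths, e.g. 'spec.replicas'."""
    if isinstance(obj, dict):
        items = obj.items()
    elif isinstance(obj, list):
        items = enumerate(obj)
    else:
        return {prefix: obj}
    flat: dict[str, Any] = {}
    for key, value in items:
        path = f"{prefix}.{key}" if prefix else str(key)
        flat.update(flatten(value, path))
    return flat


def review_diff(old: dict, new: dict) -> list[str]:
    """List the key-value changes an MR would introduce, one line per key path."""
    before, after = flatten(old), flatten(new)
    lines = []
    for path in sorted(before.keys() | after.keys()):
        if path not in after:
            lines.append(f"- {path}: {before[path]!r}")  # removed
        elif path not in before:
            lines.append(f"+ {path}: {after[path]!r}")  # added
        elif before[path] != after[path]:
            lines.append(f"~ {path}: {before[path]!r} -> {after[path]!r}")  # changed
    return lines


# Hypothetical, trimmed-down manifests before and after an MR.
old = {"spec": {"replicas": 2, "template": {"image": "web:1.0"}}}
new = {"spec": {"replicas": 3, "template": {"image": "web:1.1"}}}
for line in review_diff(old, new):
    print(line)
```

In a real review, the Git host’s own diff view plays this role; the point of the sketch is that declarative files turn a change into a small, reviewable set of key updates.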

3. CI/CD pipeline

- As soon as the MR with the infrastructure changes is approved and merged, it triggers the CI/CD pipeline.

- The pipeline is typically triggered by a webhook: your Git hosting service (GitHub, GitLab, Bitbucket, etc.) sends an event to your CI system (for example, CircleCI) whenever an MR or PR is opened or merged.

- Your CI pipeline automatically builds and tests the changes, creates a new version of the deployment artifact (typically a container image), and pushes it to a container registry.

- Your CD tool then rolls out the new version. Argo CD is one such declarative GitOps CD agent for Kubernetes; it watches the Git repository that holds the deployment manifests.

- Once the updated manifests land in Git, Argo CD detects the change and syncs the cluster to match, deploying the new container image (built earlier by the CI pipeline) to the Kubernetes cluster.

- Kubernetes then keeps the specified number of replicas of that image running and looks after their health.
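One common way to connect CI to a GitOps flow is for the final CI step to write the newly pushed image reference back into the deployment manifest and commit that change, so the CD agent picks it up from Git instead of being called directly. Below is a minimal sketch of that update step, assuming the manifest is already loaded as a dict; set_image, image_ref, and the registry.example.com registry are hypothetical names.

```python
import copy


def image_ref(registry: str, repo: str, git_sha: str) -> str:
    """Tag the image with a short commit SHA so every build traces back to a commit."""
    return f"{registry}/{repo}:{git_sha[:7]}"


def set_image(manifest: dict, container: str, image: str) -> dict:
    """Return a copy of a Deployment manifest with one container's image replaced.

    The input is left untouched, so the old and new versions can be compared
    and the new one committed as an ordinary Git change.
    """
    updated = copy.deepcopy(manifest)
    for c in updated["spec"]["template"]["spec"]["containers"]:
        if c["name"] == container:
            c["image"] = image
            return updated
    raise KeyError(f"no container named {container!r}")


# Hypothetical manifest fragment and commit SHA from the CI run.
current = {"spec": {"template": {"spec": {"containers": [
    {"name": "web", "image": "registry.example.com/shop/web:aaaaaaa"}
]}}}}
new_ref = image_ref("registry.example.com", "shop/web", "0123456789abcdef")
bumped = set_image(current, "web", new_ref)
print(bumped["spec"]["template"]["spec"]["containers"][0]["image"])
```

Keeping the image bump as a Git commit means the deployed version is always recorded in the repository, which is what lets the CD agent, and any later rollback, rely on Git as the source of truth.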

When the CI/CD pipeline itself pushes changes into the environment, the workflow is referred to as push-based deployment. The alternative is ‘pull-based deployment’, which works the same way except that the deployment pipeline is replaced with an operator running alongside the environment. Agents such as Argo CD and Flux follow this pull-based model.

- This operator continuously monitors the environment repository for changes and automatically updates the infrastructure to match it.

- The operator also monitors the live state of the infrastructure and compares it with what’s specified in the environment repository. If there are any discrepancies (drift), the operator updates the infrastructure to match the desired state in the environment repository, effectively rolling back any change that did not go through Git.
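The heart of the pull-based approach is a reconcile loop. Here is a toy sketch, assuming the desired state has been reduced to a mapping of deployment names to replica counts (a real operator compares full manifests through the Kubernetes API): each pass creates what is missing, fixes what has drifted, and deletes what Git no longer declares. Cluster and reconcile are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a live environment: deployment name -> replica count."""
    deployments: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], cluster: Cluster) -> list[str]:
    """One pass of a pull-based operator loop.

    Compares the desired state (read from the environment repository) with
    the live state and changes the cluster until they match. Returns the
    actions taken so the pass can be logged.
    """
    actions = []
    for name, replicas in sorted(desired.items()):
        current = cluster.deployments.get(name)
        if current is None:
            actions.append(f"create {name} ({replicas} replicas)")
        elif current != replicas:
            actions.append(f"scale {name} {current} -> {replicas}")
        cluster.deployments[name] = replicas
    # Anything running that Git does not declare is drift and gets removed.
    for name in sorted(cluster.deployments.keys() - desired.keys()):
        actions.append(f"delete {name}")
        del cluster.deployments[name]
    return actions


cluster = Cluster({"web": 3, "debug-shell": 1})  # hypothetical live state with drift
desired = {"web": 5, "api": 2}  # hypothetical contents of the environment repo
for action in reconcile(desired, cluster):
    print(action)
```

Running reconcile a second time with the same desired state returns no actions, which is what makes it safe for an operator to call it repeatedly, on a timer or whenever it notices a new commit.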


GitOps Tools / Stack

| Category | Tools |
|---|---|
| Version Control Tool | Git, SVN (not recommended) |
| Git Management Tool | GitLab, GitHub, Bitbucket, Azure DevOps |
| Continuous Integration Tool | GitLab CI/CD, Jenkins, CircleCI, Bamboo |
| Continuous Delivery Tool | Argo CD, Flux, Spinnaker |
| Container Registry | Docker Hub, AWS ECR, Google Container Registry (GCR), Azure ACR |
| Configuration Manager | Puppet, Chef, SaltStack, Ansible |
| Infrastructure Provisioning | AWS CloudFormation, Azure ARM, Google Cloud Deployment Manager, HashiCorp Terraform, Kubernetes-native tools (kops, eksctl) |
| Container Orchestration | Kubernetes, Docker Swarm, Amazon EKS and Google GKE (managed Kubernetes) |

Benefits of GitOps

GitOps principles are rooted in declarative systems, versioned system states, and automation. Several benefits follow from these three:

Easy Infrastructure Management

Because GitOps uses the same Git and CI/CD workflow as DevOps software delivery, changing the infrastructure becomes easy. You can collaborate on and review the declarative infrastructure code with your team, obtain the necessary approvals, and troubleshoot, audit, or roll back changes when needed.

Increased Velocity

Thanks to automation, the CD agent, and the Kubernetes operator, the infrastructure state continuously converges to what is defined in the environment repository. Hence, the Ops team doesn’t have to spend hours fixing environments, and development teams don’t have to wait for those fixes to see their application in production.

High Reliability, Scalability

Because the infrastructure is managed as code, it is easy to replicate environments and scale them horizontally. And because that code is versioned, rolling back to a previous state is as simple as reverting the last change (for example, with git revert). Together, these improve the availability and reliability of the system.
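Rolling back by reverting, rather than by rewriting history, keeps the faulty change visible in the audit log. The minimal model below illustrates that behavior: reverting the latest commit produces a new commit whose content matches the state before that change, just as git revert does for the most recent commit. The History class is a hypothetical illustration, not a Git API.

```python
from dataclasses import dataclass, field


@dataclass
class History:
    """Append-only list of committed desired states, like a Git branch."""
    commits: list[dict] = field(default_factory=list)

    def commit(self, state: dict) -> None:
        self.commits.append(dict(state))  # store a copy so later edits can't alter history

    def head(self) -> dict:
        return self.commits[-1]

    def revert_last(self) -> None:
        """Undo the latest change by committing the previous state again.

        History is never rewritten, so the faulty change stays in the audit trail.
        """
        if len(self.commits) < 2:
            raise ValueError("nothing to revert")
        self.commit(self.commits[-2])


history = History()
history.commit({"image": "web:1.0"})  # hypothetical known-good release
history.commit({"image": "web:1.1"})  # hypothetical faulty release
history.revert_last()
print(history.head(), len(history.commits))
```

With a pull-based operator in place, committing the reverted state is all it takes: the next reconcile pass brings the environment back to the earlier version.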

Enhanced Security & Compliance

In the deployment manifest YAML or JSON files, you can also declare security policies and compliance rules, so they are reviewed and versioned like any other change. Kubernetes Secrets let you handle sensitive information such as passwords, access keys, and certificates separately from application code. Note that Secret values are only base64-encoded, so teams typically encrypt them (for example, with Sealed Secrets or SOPS) before committing them to Git.
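A quick illustration of why Secrets need extra protection in a GitOps repository: a Kubernetes v1 Secret stores its values under data as base64, which anyone can decode. This sketch builds such a manifest with the Python standard library; the secret name and password are hypothetical.

```python
import base64
import json


def make_secret(name: str, values: dict[str, str]) -> dict:
    """Build a Kubernetes v1 Secret manifest; values go in `data`, base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            k: base64.b64encode(v.encode("utf-8")).decode("ascii")
            for k, v in values.items()
        },
    }


secret = make_secret("db-credentials", {"password": "s3cr3t"})  # hypothetical credentials
print(json.dumps(secret, indent=2))

# Anyone who can read the repository can recover the value:
# base64 is an encoding, not encryption.
leaked = base64.b64decode(secret["data"]["password"]).decode("utf-8")
print(leaked)
```

This is why a plain Secret manifest should not be committed as-is; encrypting it first keeps the GitOps workflow intact without exposing the value to everyone with repository access.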

GitOps vs DevOps — What’s The Difference?

DevOps is a set of cultural practices that software development and operations teams adopt to unlock greater flexibility, productivity, and efficiency, and to ship higher-quality products to users.

GitOps, by contrast, is a set of operational practices that uses CI/CD pipelines, the Git version control system, automation, and IaC to provision, monitor, and manage infrastructure at scale.

They are fundamentally not the same: DevOps is a culture and a broad set of practices, while GitOps is a concrete way of operating infrastructure. But you wouldn’t be wrong to say that “GitOps is the implementation of DevOps practices for IT infrastructure systems, to manage them at scale.”

The Final Commit

Organizations actively looking to improve their development processes and developer experience should try GitOps to streamline infrastructure management and do away with dreaded releases. By putting code in command of your infrastructure, you’re saying ‘YES’ to reliable, scalable, and secure infrastructure and ‘NO’ to error-prone manual deployment steps and processes. Given its benefits, especially in security, speed, and scalability, GitOps does seem like the future of infrastructure management.

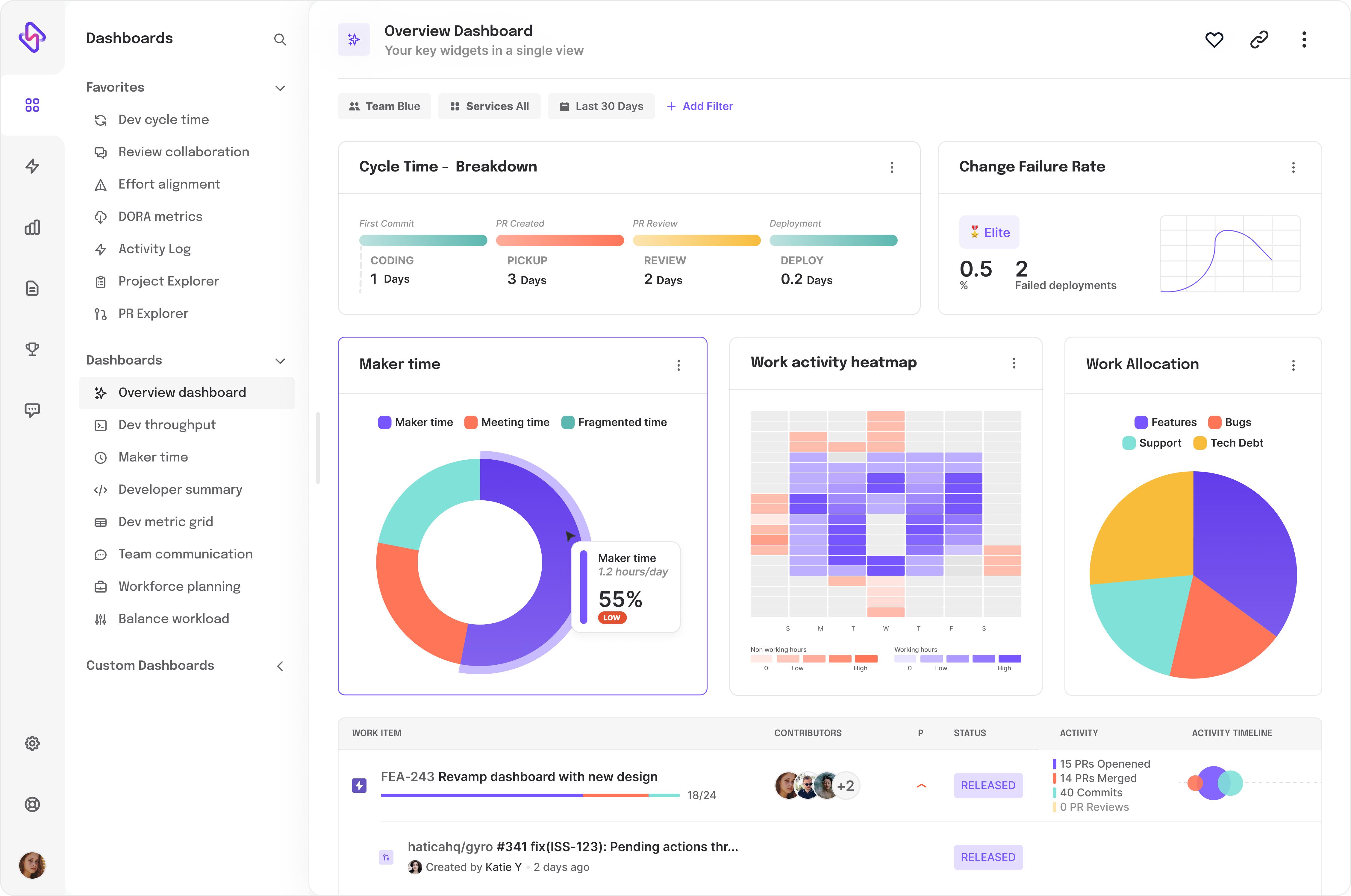

By the way, if you care about developer well-being while unlocking higher productivity and haven’t yet tried the engineering analytics platform ‘Hatica’, believe me, you’re missing out on an amazing product for your engineering teams!